Ubisoft unveils generative AI "NEO NPCs", and the spirit of Peter Molyneux's Milo lives on

"What's in it for the player?"

Much the same as last year, AI remains the talk of the town at GDC 2024. Roblox Studio has announced a new generative AI-based character creator. Graphics card manufacturer Nvidia unveiled a new AI-powered chip, sending its stock price skyrocketing. And behind closed doors, Ubisoft demonstrated something of its own to a small collection of the press: a partnership with Inworld AI to create a prototype for "Newly Evolving NPCs", or NEO NPCs as Ubisoft calls them.

It's important to stress the "prototype" factor here. At the event, Ubisoft gave a brief presentation explaining the origins of its research team - led by Ubisoft Paris and a supporting Ubisoft Production Technology crew - and its goals, often referring to the project as an "experiment". After this I had a chance to sit down and actually "play" three demonstrations of the technology in action within a hypothetical game scenario, each roughly 10 minutes long. But while these demos were technically playable, they were explicitly described as an "independent proof of concept" for now. In a brief panel discussion after the hands-on time, Mélanie Lopez Malet, senior data scientist on the R&D team, said they were only just "scratching the surface... implementation [in a real game] for me is not even on my radar."

Still, this is one of the first working examples of generative AI being used in an actual, player-facing role from any major studio - and it does work. Sort of.

The full premise is that, in Ubisoft's words, typical approaches to using generative AI with NPCs - like large language model (LLM) mods for standard games that patch ChatGPT-style functionality into dialogue options - were often somewhat "empty" or "shallow" in practice. While these NPCs can technically say anything, what they say doesn't affect the game itself, and so what you end up with, as scientific director David Louapre put it, is "roleplay, not gameplay". The goal with NEO NPCs is to merge the two.

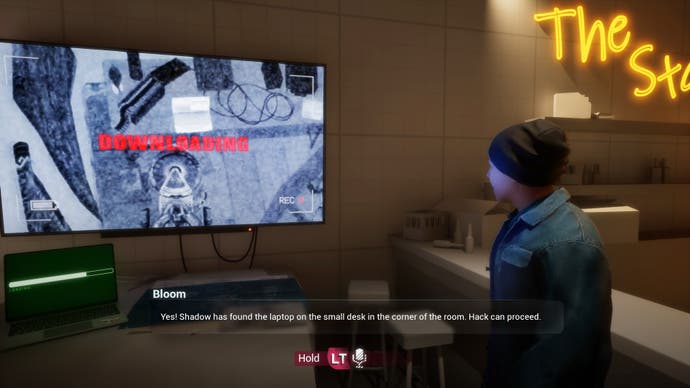

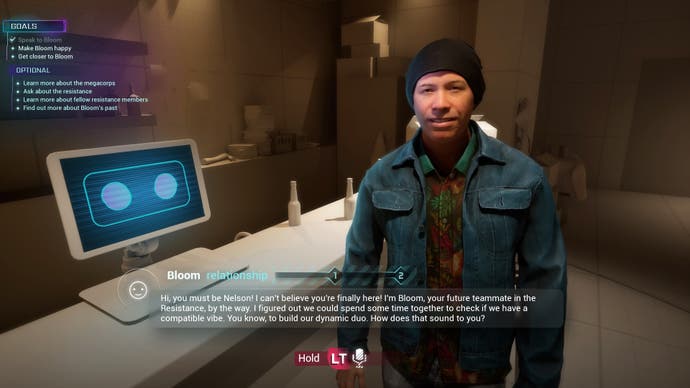

The demos served as examples of what this technology might be used for in practice. The first involved a conversation with a character called Bloom in a slightly caricatured extract from a hypothetical Ubisoft game. Bloom, an ageless man in a denim shirt and droopy beanie, is a member of the Resistance, and wants to get to know you to "check if we have a compatible vibe". You have a few objectives - befriending him, finding out more about certain topics, and so on - which tie into the conversation. The conversation itself is done via voice-to-text: you hold down LT and speak into a microphone, and Bloom reacts accordingly.

In the second scenario, Bloom is chatting with you again, this time reacting in real time to an in-game television screen showing a drone, controlled by a third, off-screen character, sneaking around an enemy location. Bloom yelps "enemy soldier spotted" as enemies appear on the monitor, while also chatting away with you and offering mission-related conversation prompts.

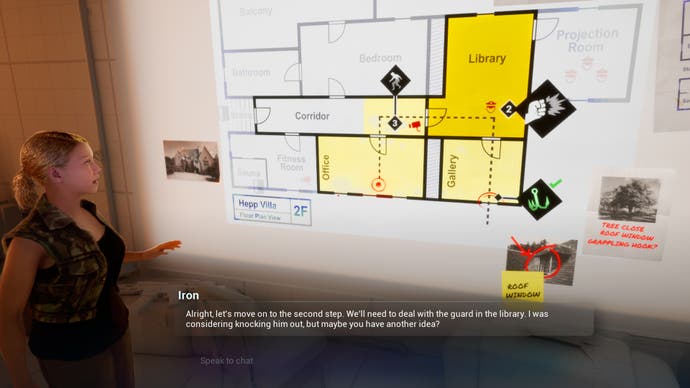

In the third scenario, we're chatting with a different character called Iron. In a GTA heist-style setup, we're looking at a planning board that displays the floorplan of an enemy base we're hoping to infiltrate. Iron asks us how we want to approach it and reacts to whatever we say, ultimately agreeing on one of a set list of actually playable options - for instance, using the tree by the window to grapple-hook into the building, or using the smoke grenades for cover, which we had noticed lying around the room and offered up as a suggestion.

The first impression from all of this - and one that came up in conversations with more than one other journalist who saw the demo - is that it all feels a little familiar. Peter Molyneux, if you remember that infamous moment on the E3 2009 stage, promised something rather similar with his doomed artificial intelligence friend, Milo.

Doomed or not, like any self-respecting mammal, my first response to engaging in conversation with an artificial entity pretending to be a member of my species was to relentlessly poke fun at it - or at the very least, test the boundaries of its logic and see where it might fail. The results were mixed, and gave a good insight into what NEO NPCs' limitations might be. When Bloom asked another journalist and me about any specialist skills we might be able to bring to the resistance, we suggested pole-vaulting and smooth-talking, to which he replied with a possibly accidental bit of wordplay about being excited to "take that leap" together with us.

As we progressed into the second and third demos, the characters remembered what we had spoken about earlier on. But despite our best attempts to suggest finding a large pole to propel ourselves through the upper windows of the enemy compound, we were always steered back to playable in-game options - "I don't think we have a pole that large". The same went for our suggestion of finding a large cardboard box, putting it over our heads, crouching down, and using that to sneak past the guards: "Sounds like a good idea, but I don't think we'll be able to find a cardboard box big enough to fit the both of us," observed Iron, astutely.

As these are very early prototypes, the conversations also bumped up against the usual limitations of LLM-driven chatbots. Very little of the dialogue would pass a human editor, for instance. Maybe we could "go through the window on the gallery's window," suggested Iron at one point. "Oh Nelson, you sure know how to win over the resistance," demurred Bloom earlier on. Virginie Mosser, narrative director on the research team, described her job as being something like a director giving prompts to "improv actors" - giving them personalities, backgrounds, and detailed briefs from which they blossom outwards. "As a writer, seeing my characters come to life and actually converse with me for the first time has been one of the most fulfilling and touching moments of my career," she's quoted as saying in the press release. There's no doubt it must be an astounding moment to have open conversations with a character you've created, but as the old film saying goes, a director's only as good as their actors.

What's more interesting is the somewhat existential dilemma that sits right at the heart of the prototype itself. Yes, the NPCs can react with near-infinite possibilities to whatever you say to them, and they can subsequently tie those reactions to actual changes in in-game objectives, scenarios, quests and so on. But what Ubisoft claims sets these NEO NPCs apart from chatbots is that they are ultimately positioned within "walls" set by humans. So you can suggest scuttling past the guards in a box, but unless that's one of the 20-odd pre-set solutions in this scenario, you can't actually do it. (Actually, when I tested this by informing Iron I would refuse to go on the mission unless she let me use the large cardboard box strategy, she relented - something a Ubisoft expert told me was an error.)

What you're left with is something very similar to a standard game, where you search the environment for clues as to what might be interactable or usable, offer them up until a character says yes, and proceed with that pre-programmed choice. All of the infinite conversation on top of that is effectively colour for the sake of your immersion - roleplay, but still not really gameplay.

There are also limits to how this could work without voice-to-text as an input (or perhaps a keyboard) - something Ubisoft itself noted wouldn't really be the preferred option for most players. But removing the voice element would mean reverting to standard game design strategies: a set list of dialogue options, some of them perhaps hidden until you discover something in the game that unlocks them, which you simply scroll through to pick what you want to say.

From left to right: Mélanie Lopez Malet (Senior Data Scientist), David Louapre (Scientific Director), Emmanuel Astier (Senior Lead Programmer), Xavier Manzanares (Producer), Virginie Mosser (Narrative Director)

And of course on top of that, there remain the same questions as ever when generative AI is involved. There was no mention of the training data for the models in use, for instance. The FAQ section of Inworld AI's website answers the question of whether it runs on OpenAI's large language models such as GPT-4 and ChatGPT (currently the subject of a copyright infringement lawsuit from The New York Times and others) as follows, although it's worth noting things may be different for the technology used specifically in this Ubisoft partnership:

"We have 20+ models that drive our characters. This includes non-LLM models like emotions, text-to-speech, speech-to-text, and more. For LLMs, we dynamically switch between LLM APIs and our own proprietary models depending on which is best for the conversational context and latency. Our goal is to provide you with the best and fastest answers. We understand the strengths of the individual LLM APIs and our own models, and dynamically query the one that will provide the best character experience."

The result, as Ubisoft's team said themselves at the event, is a prototype that relies more on the notion of what it "could be" in the future than what it really is now. There were suggestions that NEO NPCs of the future could have something closer to "vision" than current types of discovery, for instance, or more intricate linking between what those NPCs discover or encounter and how they behave.

In that sense, it's worth giving it the benefit of the doubt: Ubisoft was at pains to stress this was about asking "what's in it for the creative; what's in it for the player?", and the attempts are there to try and match the technology to an actual gameplay use. And again, these demos were effectively just mock-ups, playable proofs of concept, so it's important to judge them on what they illustrate about the technology rather than on the individual quest design at hand.

But it also comes back to an issue shared by some - not all - forms of AI technology, particularly in video games, and by many of the other supposedly revolutionary ideas of recent times. For the time being, this all remains purely speculative. This is a tool that's been built first, on the notion that if we build it, someone clever will surely come along and make use of it. There's an argument to say that's somewhat back-to-front: the best tools are solutions to problems those clever people already have.

Speaking at the same NEO NPC press briefing, Ubisoft boss Yves Guillemot described generative AI as just another new technology to be tested.
